The 24-Million Page Problem

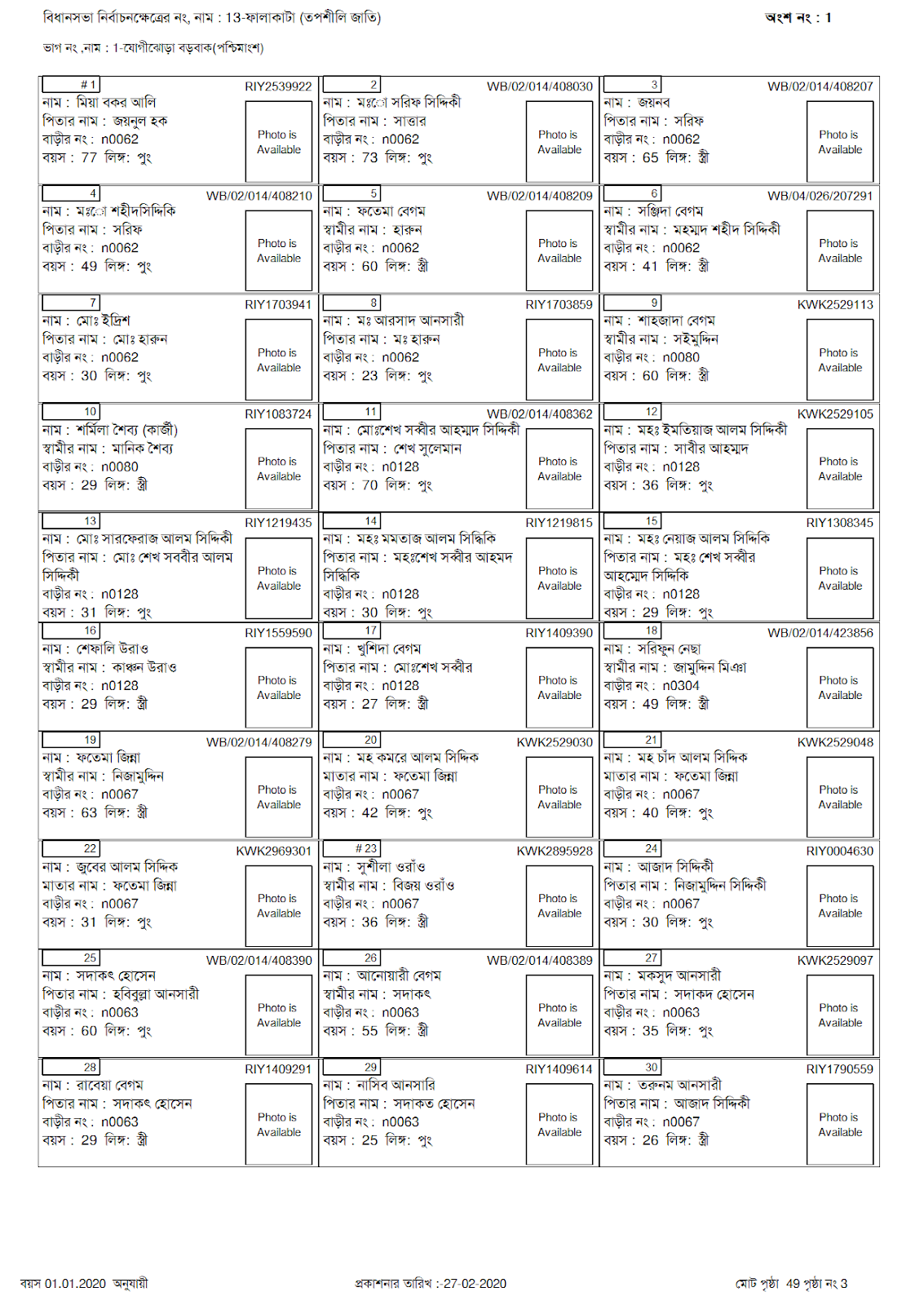

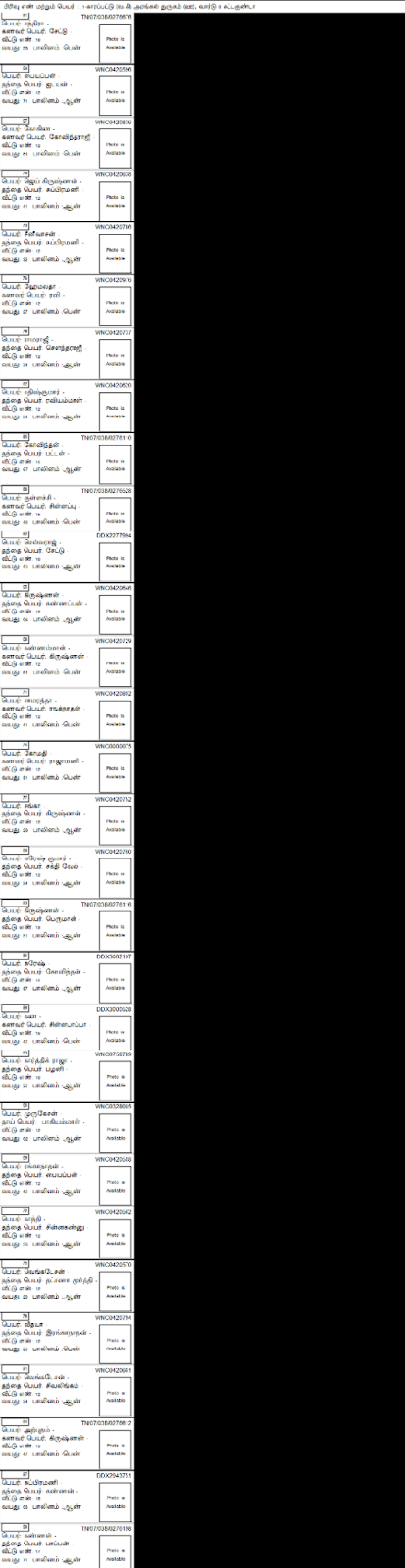

The client approached us with a massive dataset: 600,000+ PDF files containing Indian voter rolls. These weren't clean, text-based PDFs. They were scanned images—roughly 24 million pages in total.

The sheer volume was the first challenge. The second was the layout. Each page contained voter data organized in a 3-column grid.

Why Standard OCR Failed

We initially tested standard OCR tools like Tesseract. They failed catastrophically. Because the data was in a grid, the OCR engine read the text left-to-right across the columns, jumbling data from Column 1 into Columns 2 and 3.

The output was unstructured chaos. We needed a way to force the OCR engine to read "down" before reading "across."

The Solution: The "Crop-and-Stack" Algorithm

Since we couldn't change the OCR engine's behavior, we changed the input data. We developed a custom pre-processing algorithm that:

- Detects the column boundaries on every page.

- Crops the three vertical columns into separate images.

- Stacks them vertically to create a single, long strip of data.

This simple geometric transformation linearized the data. When we fed this "stacked" image into the OCR engine, it read the data perfectly from top to bottom.

Architecture: Serverless Scale

Processing 24 million pages sequentially on a single server would have taken years. We needed extreme parallelism. We architected a solution using Google Cloud Functions.

The Pipeline Workflow:

- Ingestion: The end user uploads a PDF path to our Command Line Interface (CLI) hosted on a Google Cloud VM.

- Splitting: A Bash script splits the PDF into individual pages and discards metadata pages (like the cover).

- Trigger: Each page upload triggers a Python-based Google Cloud Function. This serverless function performs the "Crop-and-Stack" operation instantly.

- OCR: The transformed images are sent to the Google Cloud Vision API, which handles the heavy lifting of character recognition.

Bare-Metal Parsing with Bash & Awk

Once we had the raw text, we needed to parse it into structured CSVs (Voter ID, Name, Age, etc.). We found that Python's text processing overhead was adding up across millions of files.

We dropped to the shell. We wrote custom parsing scripts using awk, grep, and sed. These Unix tools stream-processed the text files at disk-read speeds, parsing the structured data orders of magnitude faster than high-level languages could.

Data Enrichment: Transliteration & Demographics

The raw data contained names in regional vernacular languages (Hindi, Gujarati, Bengali). To make this data globally queryable, we needed it in English.

We implemented a Transliteration Engine using the Google Transliteration API. Unlike translation (which interprets meaning), transliteration preserves the phonetic sound of proper nouns (e.g., "सूरज" becomes "Suraj," not "Sun").

Finally, we applied a proprietary algorithm to analyze the names and identify demographic data, such as religion, adding a layer of analytical value to the raw extraction.